Artificial Intelligence comprises a series of powerful tools developed by humans. Advances in this field require the contribution of a considerable number of experts. As algorithms become more autonomous, there is a risk that professionals’ skills will decline and that AI will be poorly implemented, failing to deliver the expected benefits to society.

Artificial Intelligence is created by humans

1. Introduction

Artificial Intelligence has been created by humans, and experts in this field will be increasingly needed in the future. Contrary to a common perception, there is no magic in AI, but rather a powerful set of tools created to perform and automate some of the tasks carried out by individuals and to simulate human intelligence.

The increasing volume of data managed by public administrations, private companies and institutions, together with the competitive advantage it represents in a range of fields, has brought AI into our lives, making it a systemic part of software programming.

The rhetoric around the benefits of artificial intelligence has created hype, with inflated expectations in companies and a certain unease among the public, generating a feeling of anxiety in both.

Workers fear AI could take their jobs or make them irrelevant; companies believe their business could disappear unless they invest in AI immediately; and the general public fears a future in which AI takes over, as frequently depicted in films.

The return on investment expected by big tech companies after investing large amounts of money, together with their market approach, has contributed significantly to this hype around AI:

Image 1. Market Maturity and Expectations, 2022

May 2022. Iñaki Pinillos. Artificial Intelligence is created by humans.

Despite these over-optimistic expectations, we must not overlook the importance of AI, mainly its disruption of the way algorithms are programmed, making them far more powerful, flexible and adaptive than traditional ones.

In that sense, paraphrasing the well-known slogan of the chemical producer BASF: ‘AI does not make a lot of the software you buy; it makes a lot of the software you buy better’.[1]

In the digital era, the distinct contribution of AI to companies’ competitiveness and performance is clearly perceived, as is the countless number of powerful applications AI offers in every field of social progress.

Therefore, a careful approach must be adopted to define the right strategy for the successful development of AI, considering the full extent of the intrinsic challenges it faces, including ethics, the shortage of experts, algorithm reversion, explainability, AI knowledge among the rest of the workforce, and AI literacy among the general public.

2. Why now?

Artificial intelligence techniques are not new; on the contrary, they have been around for decades, remaining largely unnoticed until recently.

After World War II, interest in developing intelligent machines grew, although the idea of thinking machines apparently dates back to ancient Greece.

Among the main events and milestones in the history of AI, the following can be highlighted:

- 1930: Kurt Gödel publishes “The completeness of the axioms of the functional calculus of logic”, proving the completeness of first-order logic.

- 1930: Alonzo Church publishes “Foundations of Mathematics”, introducing the concept of lambda calculus, fundamental to the development of computer science and programming languages.

- 1931: Kurt Gödel’s abstract “Some metamathematical results on completeness and consistency” announces his two “Incompleteness Theorems”, laying the foundations for defining the limits of computation.

- 1936: Alonzo Church publishes “An Unsolvable Problem of Elementary Number Theory”, which encodes data in the lambda calculus and shows the limits of computation for certain kinds of functions.

- 1936: Alan Turing’s “On Computable Numbers” describes what came to be called the “Turing machine”, establishing limits on the decision problems that can be solved by algorithms.

- 1950: Alan Turing publishes “Computing Machinery and Intelligence”, introducing the much-debated Turing test as a way of comparing human and machine behaviour when performing a given task.

- 1956: John McCarthy coins the term ‘Artificial Intelligence’, and Allen Newell, J. C. Shaw and Herbert Simon create the first AI software program, the Logic Theorist.

- 1957: Ray Solomonoff’s “An Inductive Inference Machine” is considered the first paper written on machine learning.

- 1958: Frank Rosenblatt demonstrates the perceptron, running on an IBM 704, as the first computer that learns by trial and error.

- 1974: Richard Greenblatt’s MIT AI Lab paper “The LISP Machine” addresses how hardware design depends on algorithms and their efficiency.

- 1996: IBM’s chess-playing expert system, Deep Blue, becomes the first machine to win a chess game against a reigning world champion (Garry Kasparov) under regular time controls. This showcased the capabilities of AI, even though Deep Blue was a unique, purpose-built supercomputer.

Taking all these landmarks into account, what has been the turning point for AI in recent years? What has turned AI into a science that is so universally popular in so many respects?

No single, isolated advance has paved the way for artificial intelligence. Instead, the convergence of several maturing technologies has made it possible, among them the growth and availability of stored data, the exponential increase in computing and storage capacity, and advances in algorithmic efficiency.

The amount of data that can be handled with current tools and software has increased considerably; hardware is available to capture data, store it and run algorithms to extract valuable information; and, more importantly, data can be processed at low cost and in a reasonable time, even in real time for many applications.

This means that live solutions and services can be built on data at the very moment it is generated.

After decades of existence, AI has, over the past few years, started to have a real impact on our lives thanks to the evolution of numerous enabling technologies across different sectors.

2.1. Rise in stored and interchanged data

The exponential rise in Internet use, the improvement of telecommunication networks and the ease of capturing data, thanks to the evolution of the sensor market and IoT technologies, have greatly increased the amount of stored and exchanged data. As a result, datasets large enough to train algorithms properly are now available.

In addition, installing new families of sensors gives machines new ways to understand and interact with their environment, which widens the range of problems that can be solved and further adds to the amount of data generated (alongside multimedia and other related files).

When asked in 2011, Eric Schmidt, Google’s CEO at the time, revealed that humanity had generated only around 5 Exabytes of data up to 2003, while the same amount was by then being generated every two days. Estimates indicate that 295 Exabytes had been generated by 2007, 600 Exabytes by 2011 and 44 Zettabytes by 2020. Meanwhile, the pre-pandemic forecast for 2025 was 463 Exabytes generated per day.

Data flows are harder to estimate, but the trend is equally clear: traffic in 2022 was expected to exceed all Internet traffic up to 2016, driven by the impact of the pandemic. Monthly global data traffic is predicted to exceed 700 Exabytes within the next five years.[2]

Applying AI algorithms to these large volumes of collected data makes it possible to uncover previously unknown knowledge and to discover helpful correlations that could not have been inferred otherwise.

At this point, it should be noted that having an enormous quantity of data does not in itself ensure the quality AI needs to succeed (although it increases the chances). Big data and good data should go hand in hand, but this is not always understood.

This is another challenge to consider, since information and useful information are not the same thing in this field.

2.2. Exponential increase of computing and storage hardware capacity

The first computing machines were big, expensive, difficult to maintain and had high power consumption. They could, nevertheless, perform high-speed numerical calculations, which allowed the scientific community to accomplish projects that would not have been possible without them.

Since the early 1960s, when IBM rolled out the IBM 7030 Stretch and Sperry Rand introduced the UNIVAC LARC, machines based on floating-point operations have kept progressing and have been rated by the number of such operations they deliver per second.

From then on, processor capacity has kept growing (see Moore’s law, the observation that the number of transistors on a chip doubles roughly every two years), while power consumption and size have decreased. As a result, processor manufacturing reached economies of scale, making it possible for families and businesses to afford computers. It also led companies specialised in supercomputers to turn to general-purpose processors and avoid investing in expensive specialised ones, paving the way for what we know today as High-Performance Computing (HPC).

This, together with the creation of Linux, made HPC clusters possible at a reasonable cost. In fact, by 2005, 70% of the Top500 list of supercomputers were already clusters of x86 servers.[3]

To put today’s speeds into perspective, the IBM 7030, introduced in 1961, was capable of 2 Megaflops; the Cray-1, released in 1976, reached around 160 Megaflops[4]; while Frontier reaches 1,102.00 Petaflops[5] using 8,730,112 cores.
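The comparison above is easier to grasp as ratios. The short sketch below simply converts the quoted figures to FLOPS (floating-point operations per second) and divides them; the variable and function names are illustrative, and the per-core value is a naive average that ignores how Frontier’s cores actually differ from one another.

```python
# Peak speeds quoted in the text, converted to FLOPS.
MEGA, PETA = 1e6, 1e15

ibm_7030 = 2 * MEGA            # IBM 7030 Stretch (1961), ~2 Megaflops
cray_1 = 160 * MEGA            # Cray-1 (1976), ~160 Megaflops
frontier = 1_102.00 * PETA     # Frontier, 1,102.00 Petaflops
frontier_cores = 8_730_112     # core count quoted for Frontier


def speedup(new: float, old: float) -> float:
    """How many times faster `new` is than `old`."""
    return new / old


print(f"Frontier vs IBM 7030: {speedup(frontier, ibm_7030):.2e}x")
print(f"Frontier vs Cray-1:   {speedup(frontier, cray_1):.2e}x")
# Naive average: total speed divided by the quoted number of cores.
print(f"Average per core:     {frontier / frontier_cores / 1e9:.0f} Gigaflops")
```

By these figures, Frontier is roughly 550 billion times faster than the IBM 7030 and nearly 7 billion times faster than the Cray-1.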

In the coming years, several HPC centres worldwide are expected to reach exascale, not to mention the improvement and use of Quantum Computing, which represents a paradigm shift: from bits, which hold one of two fixed states (0 or 1), to qubits, which can hold a superposition of both and describe more accurately the uncertainty observed in many areas of the Universe. Quantum Computing will be able to replicate and predict some of nature’s most puzzling phenomena, and its computing capacity will surpass current algorithms in other areas, such as cryptography or cybersecurity. As an example, quantum computers are expected to solve certain random number generation problems in minutes, something that would take hundreds of millions of years on HPC computers.

By way of comparison, a 30-qubit computer can be considered equivalent in processing power to a conventional HPC cluster running at Teraflop speeds[6]. Very recently, IBM announced its Eagle processor, a significant jump in qubit count to 127 qubits.

IBM expects to release a 1,121-qubit chip before the end of 2023.

Nevertheless, the full performance of Quantum Computing is not expected until a capacity of around 1,000,000 qubits is reached.
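A little arithmetic helps explain why qubit counts are compared with large classical machines. A general n-qubit state is a superposition over 2^n basis states, so simulating it exactly on a classical computer means storing 2^n complex amplitudes. The sketch below assumes a dense state-vector simulator that stores each amplitude as two 64-bit floats (16 bytes); this is one common simulation approach, not the basis of the equivalence cited above, and the function names are illustrative.

```python
import math

BYTES_PER_AMPLITUDE = 16  # assumption: complex number stored as two 64-bit floats


def amplitudes(n_qubits: int) -> int:
    """A general n-qubit state is a superposition over 2**n basis states."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int) -> int:
    """Memory needed to hold the full state vector in a dense classical simulation."""
    return amplitudes(n_qubits) * BYTES_PER_AMPLITUDE


def single_qubit_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """For |psi> = alpha|0> + beta|1>, measuring gives 0 or 1 with these probabilities."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if not math.isclose(norm, 1.0):
        raise ValueError("amplitudes must be normalised")
    return abs(alpha) ** 2, abs(beta) ** 2


for n in (30, 127):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {amplitudes(n):.3e} amplitudes, {gib:.3e} GiB")

# Equal superposition: 0 and 1 are each observed with probability ~0.5.
print(single_qubit_probabilities(1 / math.sqrt(2), 1 / math.sqrt(2)))
```

For 30 qubits this comes to 16 GiB; every additional qubit doubles the requirement, which is why exact classical simulation quickly becomes impractical as qubit counts grow.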

Concerning storage, punched cards were used from the 18th century until the mid-1980s, although from the 1960s they were gradually replaced by magnetic storage as the primary means of data storage. Since then, storage systems have improved remarkably in capacity, energy consumption (except for cards and tapes), size, access speed and cost per bit. DNA data storage is expected to be the next revolution in this field.

2.3. Advancement in algorithms

Research has shown that a large share of current algorithms have evolved promisingly, becoming more efficient and, in some cases, delivering greater performance gains than those obtained from hardware evolution. This is becoming even more important as Moore’s law appears to be coming to an end.

In this regard, programmers are working hard to build systems able to program AI algorithms without human intervention, with some success in certain cases. However, this is not without risks for the evolution of AI, as AI needs human expertise to improve, not to mention the debate around a regulatory framework requiring human supervision for technical and ethical reasons.

In any case, the fact that algorithms become more efficient and, to some extent, code themselves should not be seen as apocalyptic, since this has been quite common throughout the history of programming, with increasing automation achieved in order to gain efficiency, both in writing programs and in executing them.

3. Is AI Intelligent in a Human Way?

Artificial Intelligence today (and unless the current trend changes) clearly does not equal what is commonly understood as full human intelligence, although it exceeds the brain’s capacity in many respects (for instance, the ability to manage large volumes of information and the speed of big data processing), in the same way that machines have eclipsed our strength and our capacity to handle heavy loads or execute strenuous tasks. We could therefore say that machines can mimic some human behaviours but are far from replicating all of them, and even farther from bringing them together in a single machine.

With no intention of starting a debate, and at the risk of oversimplifying a really serious matter, we could imagine a person working in a factory, trained to move any kind of object of any size from one place to another, who is suddenly required to do the same with small animals that do not stay still. How would we assess that individual’s intelligence if he or she got stuck performing the task because this one variable slightly changed the context?

AI’s potential is limitless, and so is the need to keep working on and developing it as a way to achieve human augmentation, as has happened with other technologies, both physically and mentally (take calculators, spreadsheets or storage), but at an exponentially faster pace and with a massive number of applications disrupting every aspect of our lives.

Considering this, and in order to exploit its full potential in the future, technology leaders must demystify AI, focusing on feasible tasks and on research. We should pursue new developments in how machines perceive and interact with their environment and solve problems, without trying to push them beyond their current capabilities, to avoid a feeling of failure and inadequacy among end users. At the same time, it is even more important to prevent fears by carefully considering ethics in every aspect of the technology and not letting imagination run wild about what things could be like if AI were something else. Emotional responses to AI are a real risk: they could delay early adoption and have a negative impact on countries’ competitiveness and social progress.

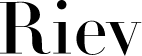

The following Gartner® image on AI techniques shows some of the terminology used in this domain and helps clarify the state of the art:

Image 2. Gartner AI Techniques Framework

March 2022. Brethenoux, E. (Gartner). What Is Artificial Intelligence? Ignore the Hype; Here’s Where to Start.

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

4. Is AI Human Dependent?

No matter what we read, imagine or are told, what we today call Artificial Intelligence, which could be far better understood as machine intelligence, is created by humans and depends on experts: not only for the design of algorithms, but also for their training and execution, and for the creation of more powerful hardware, based on different technologies, that can process large amounts of data in a timely and cost-effective manner. Advances are also needed in data quality, through better sensors, communication networks and databases.

With this in mind, it is difficult to imagine a future with a fully autonomous Artificial Intelligence that requires no human intervention. Not to mention that, with the current approach and unless synthetic biology changes it, all the machines and devices involved require a significant amount of power to run, mainly electricity, and even in this respect AI is completely dependent on humans.

That said, this must not be understood as giving AI less credit than it deserves. On the contrary, a realistic approach can help implement AI in the most effective way for the benefit of individuals.

As already mentioned, AI, as the powerful toolkit it is, disrupts many aspects of our lives by allowing people to make better, faster and more accurate decisions, and even to automate them, thanks to its ability to process and analyse amounts of data that a human brain cannot handle.

Last but not least, AI requires not only technical experts and STEM professionals but also professionals from the human sciences, responsible for training programmers and algorithms and for shaping the future of AI. Together, these professionals will ensure a trustworthy AI that is always used for the right purpose and in the proper manner, preventing social division and ensuring robustness, privacy, explainability, accountability and ethics.

Image 3. AI jobs and skills

May 2022. Iñaki Pinillos. Artificial Intelligence is created by humans.

5. Are Humans AI Dependent?

AI is not only a source of innovation and differentiation; it is also fundamental to the future economic and social development of countries. This article has analysed the dependence of AI on humans, but in a future that cannot be understood without digitalization, making the most of available data and implementing better software has proved crucial. This is exactly what AI offers: the right tools to collect valuable data and to make algorithms better.

So, to some extent, much of the progress achieved in different fields (personalised medicine, food production, the fight against poverty, sustainability, reducing inequality) depends on AI. Even if we are not totally dependent on it, we must admit that our society’s current well-being would not be the same without the proper use and improvement of these powerful techniques.

5.1. Social development and AI

Thanks to AI, many of today’s most important social issues can be addressed (although at the risk of widening some social divides if its use is not collective and affordable).

Hundreds of use cases related to the UN’s Sustainable Development Goals could be discussed here, potentially helping millions of people in emerging and developed countries, not only by supporting research in those fields but also by enhancing current knowledge through AI’s power to analyse data, language, text and images.

Some examples can be observed in several large domains:

- Health: Personalised medicine (diagnosis of rare diseases, cancer diagnosis and treatment); wearables (early disease detection thanks to AI-embedded devices, diabetes being one example); pandemic response.

- Hunger: Analysis of soils, weather and plant genetics for better crops.

- Education: Adaptive learning (tailoring content to students’ abilities and performance).

- Climate change: Detection of fires, illegal logging or deforestation from satellite imagery; better sorting of recyclable materials; energy management.

- Biodiversity: AI-powered image classification and object detection to track illegal wildlife trade.

- Equality and inclusion: Analysis of interactions in social networks to detect discriminatory messages based on race, sexual orientation, religion, citizenship and disabilities; identification of individuals through mobile phones, based on sound and voice patterns, for inclusive access to banking.

In any case, limited access to technology in emerging economies represents a major obstacle to an inclusive use of AI that fulfils the expectations created.

5.2. Economic competitiveness and AI

AI can bring a wide range of economic benefits across the entire spectrum of industries and become a powerful engine for the economy.

The use of AI in companies and the public sector could generate additional global economic activity of over $13 trillion by 2030, adding around 1.2 percent to GDP growth per year. These figures clearly surpass those derived from the introduction of steam engines, the impact of robots or the spread of IT.[7]

On the other hand, business leaders tend to overestimate the impact of AI and underestimate its complexity, while global AI spending in 2022 is forecast to exceed US$79 billion.

If we want to get the most out of AI techniques from an economic perspective, we should remove any obstacles hindering their implementation, assessing value and providing literacy to professionals and end users (since AI will have a transformative effect on both workers and consumers). Only in this way will the right strategy for growth and real benefits be achieved.

We must admit that our psychological processes of acceptance, behaviour and trust move at a slower pace than digital tools, and as a result there is clearly a gap today between what technology offers and what we are ready to accept. Therefore, investment is needed not only in technical areas but also in driving cultural change.

At the same time, public administrations should focus on maintaining both the innovation and the competitiveness of their companies while protecting workers, consumers and citizens from harm.

In any case, it is clear that companies are competing to differentiate themselves through AI tools while discovering that they have much less data than they need. To help solve this, shared data spaces such as the Gaia-X project in Europe have been introduced and, more recently, algorithms for generating synthetic data have been improved.
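As a deliberately simple illustration of the synthetic data idea, the sketch below fits a bivariate Gaussian (two means, two standard deviations and one correlation) to a small numeric table and samples new rows from it. The records are invented for the example, and the functions `fit` and `sample` are hypothetical helpers; real synthetic data tools use far richer generative models and must also be checked for privacy leakage, which this sketch does not do.

```python
import math
import random
import statistics

# Hypothetical "real" records: (age, annual_income_k) pairs invented for this example.
real = [(25, 28.0), (32, 35.5), (41, 48.0), (29, 31.0), (52, 61.5),
        (38, 44.0), (45, 50.5), (23, 24.0), (60, 66.0), (35, 39.5)]


def fit(rows):
    """Estimate means, standard deviations and correlation of two numeric columns."""
    xs, ys = zip(*rows)
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sx, sy = statistics.pstdev(xs), statistics.pstdev(ys)
    cov = sum((x - mx) * (y - my) for x, y in rows) / len(rows)
    return mx, my, sx, sy, cov / (sx * sy)


def sample(params, n, seed=0):
    """Draw n synthetic rows from a bivariate Gaussian with the fitted parameters."""
    mx, my, sx, sy, rho = params
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mx + sx * z1
        # Mixing z1 into y reproduces the correlation rho between the two columns.
        y = my + sy * (rho * z1 + math.sqrt(max(0.0, 1 - rho ** 2)) * z2)
        rows.append((x, y))
    return rows


params = fit(real)
synthetic = sample(params, 1000)
print("real:     ", [round(v, 2) for v in params])
print("synthetic:", [round(v, 2) for v in fit(synthetic)])
```

The synthetic rows reproduce the overall statistics of the original table (means, spread and correlation) without copying any individual record, which is what makes them useful when real data is scarce.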

Another challenge several countries currently face is the performance gap between front-runners and non-adopters, so policies promoting the use of AI in SMBs, which are considered an important part of the economy, are emerging strongly.

In any event, AI strategies are becoming imperative for business competitiveness, improving or enabling the following:

- Enhancement of products and services.

- Optimization of internal business operations.

- Better decision-making.

- Automation to free up workers for more creative tasks.

- Optimization of external processes.

- Creation of new products.

- Entering new markets and market analysis.

- Scarce knowledge capture and application.

- Business automation to reduce headcount and cost analytics.

- Stimulation of consumer demand.

6. Who will Enhance AI if we Run out of Experts?

Without AI professionals, AI would neither exist nor improve.

AI can automate multiple tasks, but it is still dependent on humans in many ways, not only for its current performance but also for its future development, from both a technical and a social perspective. People are making AI smarter and more ethical at the same time as AI is reinforcing human capacity. Hundreds of thousands of researchers publish articles every year, with the US and China, followed by the UK, Australia and Canada, being the countries with the highest number of high-impact researchers.

Without intending to be exhaustive or to open a debate, the AI ecosystem comprises different areas of knowledge, and there is demand for professionals in all of them:

- Hardware engineers: Research and development in the evolution of hardware to provide enough computing capacity for algorithms to be deployed, including the design of new chips, new architectures and quantum computing.

- Infrastructure and Cloud engineers: Providing and configuring hardware and operating systems where AI can run efficiently.

- AI researchers: Creating new kinds of AI algorithms and systems expanding AI capabilities.

- Data operators: Tagging and cleaning data properly.

- Data Engineers: Building modern structures for the development of AI models.

- Data stewards: Being responsible for data organization as well as for ensuring compliance with internal and external regulations.

- Data Scientists or AI Engineers: Analysing and extracting valuable information from data. They represent the link between fundamental research and the real world and differ from software engineers in their focus on data. They study the latest machine learning techniques and apply them to datasets.

- Data philosophers and sociologists: They give AI products human and social meaning.

- Software developers: Architecting and coding AI systems.

- Business experts or AI translators: Understanding both sides, AI and business and bridging the divide between technical staff and operations.

- User experience designers: Making AI systems user friendly.

- AI operators: Operating and monitoring AI algorithms.

This illustrates not only the skills needed for AI development but also the large number of people needed for AI deployment and research if AI is to fulfil the expectations created and play its role in economic and social advancement. Open-source libraries and a growing range of no-code, low-code and high-code options on the market partly address this challenge by reducing the need for human interaction.

Although not apparent at the moment, there is a risk in automating some key matters or assigning AI workers to less skilled tasks that offer a high return on investment. Companies and governments might be tempted to focus on quick wins and neglect the fundamentals of AI, recruiting highly skilled profiles to handle day-to-day production tasks in an attempt to make up for the shortage of professionals. At the same time, employees with higher potential in research could also be tempted by the attractive salaries of more common jobs.

If this happens, AI will not improve at the right pace and will certainly not achieve the importance it is expected to have in the future of humanity.

Although this is far from happening, it is a risk that society must not forget, giving research the importance it deserves and acknowledging that AI is created by humans and will invariably need them, not only to operate but also to grow in capacity and across disciplines.

7. Ethics and Regulation

AI is created by humans and should be used to enhance our lives. Bearing this in mind, and to avoid the unsatisfactory results that other technological advances have had on our society in recent years, the use of AI must be regulated with special consideration for ethics. Many of the challenges faced globally are related to this, and nations have started to act accordingly, with Europe aiming to be at the forefront of the ethical implementation of AI.

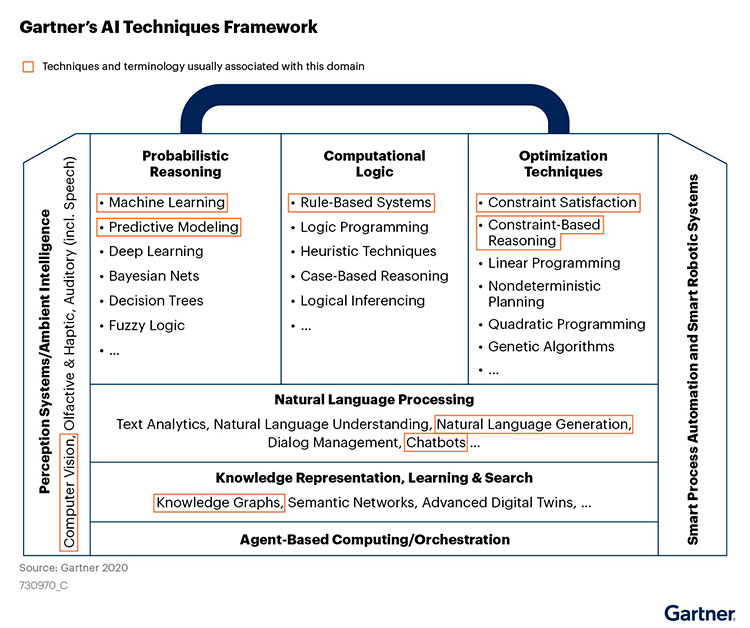

This needs to be considered and addressed from different angles, as the following image shows:

Image 4. Overview of the Most Commonly Mentioned Guidelines

December 2020. Buytendijk, F.; Sicular, S.; Brethenoux, E.; Hare, J. (Gartner). AI Ethics: Use 5 Common Guidelines as Your Starting Point. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

AI may be misused in different areas of life by authorities, companies and private individuals with access to AI tools and techniques. This risk makes it necessary to establish a set of governing rules and ethical principles that prevent AI from harming the very individuals it is intended to help.

Until now, due to the rapid pace of software and platform development, digital ethics have in most cases been considered only ‘a posteriori’. Artificial intelligence must anticipate possible legal and ethical issues to ensure its successful implementation and adoption.

Widening existing inequalities and divides and violating data privacy should be avoided by providing a trustworthy AI that is human-centred, fair, explainable, robust, secure and accountable, with a special focus on having a positive social impact.

In this regard, several countries and entities are trying to take the lead in AI regulation with different approaches, with the European Union publishing in April 2021 its “Regulatory framework proposal on artificial intelligence”, the “first-ever legal framework on AI, which addresses the risks of AI and positions Europe to play a leading role globally”.[8]

Together with the updated Coordinated Plan on AI, the proposal is part of a wider AI package and aims to provide AI developers, deployers and users with clear requirements and obligations regarding specific uses of AI, while seeking to reduce bureaucracy for businesses, in particular SMEs.

The new rules are to be applied directly and in the same way across all Member States, based on a future-proof definition of AI and following a risk-based approach with four levels: unacceptable risk, high risk, limited risk and minimal risk. Depending on their classification, AI systems could either be banned or be subject to different kinds of obligations aimed at enhancing the security, transparency and accountability of the applications.
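As a rough illustration only, the risk-based approach can be thought of as a lookup from risk level to regulatory consequence. The four tier names follow the proposal; the one-line summaries of obligations, the `consequence` and `is_permitted` helpers and their wording are simplified, hypothetical choices for this sketch and are not legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk levels named in the EU regulatory framework proposal on AI."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified, non-authoritative summary of what each tier implies.
CONSEQUENCES = {
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.HIGH: "allowed, subject to strict obligations "
                   "(e.g. risk assessment, documentation, human oversight)",
    RiskTier.LIMITED: "allowed, subject to transparency obligations",
    RiskTier.MINIMAL: "allowed, with no additional obligations",
}


def consequence(tier: RiskTier) -> str:
    """Return the simplified regulatory consequence for a risk tier."""
    return CONSEQUENCES[tier]


def is_permitted(tier: RiskTier) -> bool:
    """Only systems in the unacceptable tier are prohibited outright."""
    return tier is not RiskTier.UNACCEPTABLE


for tier in RiskTier:
    print(f"{tier.value:>12}: {consequence(tier)}")
```

The point of the structure is that obligations attach to the classification of a use case, not to the underlying technique, so the same algorithm can fall into different tiers depending on how it is applied.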

Assuming the proposed regulation is passed, compliance is expected to be required from the second half of 2024. Additionally, in February 2022 the European Commission adopted a proposal for a regulation on harmonised rules on fair access to and use of data, also known as the Data Act, a key pillar of the European strategy for data. This new measure complements the Data Governance Act, clarifying who can create value from data and under which conditions.

At the same time, Europe has recently approved its Digital Services Act to create a safer digital space and protect consumers’ online rights by defining a powerful transparency and accountability framework.

Meanwhile, China has started by passing the “Internet Information Service Algorithmic Recommendation Management Provisions”, effective from March 1, 2022, which for now take a narrower perspective than the European Union’s approach.

These provisions apply to algorithmic recommendation services, requiring them to “observe social morality and ethics, abide by commercial and professional ethics and respect the principles of fairness and justice, openness and transparency, science and reason, and sincerity and trustworthiness”[9].

As a further step, the Ministry of Justice of the People’s Republic of China intends to regulate AI through a national strategy to protect security and the social public interest.

In the United States, although the approach to AI regulation has so far been fragmented, there are also some national initiatives, such as the “National Artificial Intelligence Initiative” and the “Algorithmic Accountability Act of 2022”, which would direct the Federal Trade Commission to create regulations governing entities’ use of AI.

Even before that, the U.S. Federal Trade Commission had shown its proactivity in pursuing a truthful, fair and equitable use of AI, preparing companies for future ethical regulatory frameworks.

In any case, the European Union has shown a bigger ambition and a much wider approach in this matter.

Therefore, it is not a longer a question whether AI will be regulated or not, but how this will be done. The challenge lays on designing a framework that does not represent a constraint but an opportunity to foster the use of AI by improving AI literacy, giving response to the mentioned challenges and providing comfort to end users at the same time as securing massive investments for companies. Only this way, and by thoroughly considering the regulatory implications ex-ante, will the most of AI promises be fulfilled in the coming years before its use is globally spread.

On the technical side, several trends also focus on distinct aspects, such as:

- Synthetic data: Newly generated data produced with AI tools could minimize the collection of sensitive personal data. Synthetic data mirrors real-world data and preserves a certain similarity to it, but cannot be traced back to an individual, which makes its use efficient and flexible, as it sidesteps privacy requirements. It can also provide novel and extreme scenarios for better training of algorithms, reflecting ‘what if’ models with unplanned disruptions or unexpected events, or supporting planning.

- AI trust and explainability: Growing concerns among end users can only be eased if data specialists, legal experts, risk experts and business stakeholders assess and validate AI’s outcomes to ensure explainability and accountability. In that sense, several companies working on liability checks and governance have emerged in recent years, with support from public policies. Some techniques attempt to identify which parts of the input data a trained model relies on most to make its predictions, whilst generalized additive models build predictions from single-feature models (optionally adding selected interactions between features), which allows experts to understand each component more easily.

- Transfer learning: Improving how a model is trained by applying what it has learned on one specific task to different tasks.

- Bias risk study: Bias has a greater cultural and social component than a technical one and, as such, cannot be solved through technological advances. It is a matter of key importance that has raised broad social concerns, and it is challenging but fundamental for the implementation of ethical AI. Non-profit organizations, private companies, public authorities, academic institutions and individual experts are already devoting significant time and funding to confronting and studying the matter.
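The attribution techniques mentioned under AI trust and explainability can be illustrated with permutation feature importance, one common model-agnostic method (chosen here as an example; the article does not say which techniques these companies use). The sketch below assumes a hypothetical toy linear model (`WEIGHTS`, `predict`) standing in for any trained model: it shuffles one input feature at a time and measures how much the prediction error rises, so the features the model relies on most produce the largest increase.

```python
import random

# Hypothetical toy model (an assumption for illustration): a fixed linear
# scorer standing in for any trained model. Feature 0 matters most,
# feature 1 a little, feature 2 not at all.
WEIGHTS = [3.0, 0.5, 0.0]


def predict(row):
    """Return the model's prediction for one input row."""
    return sum(w * x for w, x in zip(WEIGHTS, row))


def mean_squared_error(model, rows, targets):
    """Average squared difference between predictions and targets."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=10, seed=0):
    """Mean increase in error when each feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle column j only, breaking its link with the targets.
            column = [r[j] for r in rows]
            rng.shuffle(column)
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mean_squared_error(model, permuted, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


if __name__ == "__main__":
    data_rng = random.Random(42)
    rows = [[data_rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
    targets = [predict(r) for r in rows]  # noise-free targets from the same model
    for j, score in enumerate(permutation_importance(predict, rows, targets)):
        print(f"feature {j}: {score:.3f}")
```

With these weights, feature 0 should come out far ahead of feature 1, and feature 2, which the model ignores, scores exactly zero. Permutation importance is only one option; a generalized additive model instead makes each feature's contribution directly inspectable by construction.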

8. Conclusions

Where AI is concerned, it has been difficult in recent years to find a neutral position.

Researchers have felt quite satisfied with their discoveries and advances. Big technology corporations have invested large amounts of money in developing solutions and have pushed to obtain a return on investment and sales. Companies in general have felt pressure to use AI in their processes, products and services, along with the anxiety of not knowing how to do it. Workers have felt threatened with being replaced by AI instead of being given the proper tools to approach their tasks optimally. Public administrations and governments have found themselves caught in the middle: on the one hand, they need to adopt digital technologies quickly, as they are in some way competing against large companies that exert control over individuals, take over some of their responsibilities and apply their own ethics; on the other hand, they need to define regulatory frameworks for every new technology. Meanwhile, the rest of humanity has imagined a future taken over by machines.

As in other fields with significant underlying economic interests, and considering how much the world has changed in recent decades thanks to digitalization, there has been considerable fuss about how AI is disrupting our lives, since many stakeholders feel lost, insecure and unaware of the real impact of what they are dealing with.

AI is a powerful set of techniques that has existed for some time but has found in recent years the right conditions to take off, and the right enablers to do so successfully (large datasets and high-performance computing and storage capacity, among others). This has led to better algorithms and to the use of AI at scale in software development, making software respond dynamically to changing conditions rather than producing the static outputs it did before.

Although AI is far from the magic solution it is sometimes marketed as, it must not be underestimated, since the projected figures for its social and economic impact in the coming years speak for themselves. The social benefits related to each of the United Nations’ Sustainable Development Goals are evident, from health and hunger to equality and inclusion. Applications of AI in fields such as personalised medicine, bridging the digital divide and sustainable agriculture are promising, and many benefits have already been achieved.

It is now that it should be made clear that AI is created by humans and for humans. Without the help and intervention of experts, and without the acceptance of individuals, AI will not have the expected impact ($13 trillion in additional global economic activity by 2030, in addition to a significant social impact), nor will it be the game changer it is set to be.

AI is not intelligent in a human way, but it successfully replicates some human behaviours and vastly surpasses our capacity to compute and process data, so we must recognise the importance of research in this field. Careful attention must be paid to the implications of automating the production of AI algorithms, as it could lead experts to lose skills and, tempted by high salaries, to focus on solving day-to-day problems rather than devoting their time and expertise to improving AI and making the most of it.

In addition to leveraging current skills and promoting STEM vocations to ensure an adequate number of professionals, governments must fund research properly, as nations’ future competitiveness and individuals’ wellbeing are at stake. As an illustration, the US and China are expected to capture 70% of the global GDP growth attributable to AI up to 2030.

Last but not least, public administrations must address any intrinsic risks that the use of AI may entail. AI is created by humans and its use must benefit humankind; misuse for the benefit of a few therefore cannot be allowed. Ethical aspects must be regulated and an adequate regulatory framework provided. Doing this ex-ante can avoid the hurdles that social networks, commercial platforms and other digital tools have faced in recent years.

Nations are already working in this direction: promoting digital and AI literacy among professionals and the public, regulating digital rights, requiring explainability in AI models, securing human intervention and supervision, protecting data privacy, and enhancing the social use of artificial intelligence techniques.

The future of humanity is expected to be better thanks to AI, but achieving this requires joint action and public-private cooperation built on common goals, principles and ethics.

9. References

Bughin, J.; Seong, J.; Manyika, J.; Chui, M.; Joshi, R. (2018). Notes from the AI frontier: Modeling the impact of AI on the world economy. McKinsey Global Institute.

Chui, M.; Harrysson, M.; Manyika, J.; Roberts, R.; Chung, R.; Nel, P.; van Heteren, A. (2018). Applying artificial intelligence for social good. McKinsey Global Institute.

Chui, M.; Harrysson, M.; Manyika, J.; Roberts, R.; Chung, R.; Nel, P.; van Heteren, A. (2018). Notes from the AI frontier. Applying AI for social good. McKinsey Global Institute.

European Union. (2021). Proposal for a regulation of the European parliament and of the council laying down harmonised rules on artificial intelligence (artificial intelligence act) and amending certain union legislative acts. European Commission.

Hupfer, S. (2020). Talent and workforce effects in the age of AI. Insights from Deloitte’s State of AI in the Enterprise, 2nd Edition Survey. Deloitte.

McCarthy, J. (2007). What is Artificial Intelligence? Stanford University.

European Union (2022). European Data Act. European Commission. Accessed 24 November 2022.

U.S. Congress (2022). H.R.6580 - Algorithmic Accountability Act of 2022. Accessed 24 November 2022.

[1] Brethenoux, E. (2022). What Is Artificial Intelligence? Ignore the Hype; Here’s Where to Start. Gartner.

“A Campaign for BASF,” The New York Times, 26 October 2004. In almost every brand awareness test, BASF’s circa-1990 North American commercial tagline, “We don’t make a lot of the products you buy. We make a lot of the products you buy better.”, may be considered among the most recognized corporate slogans.

[2] Fajarnes, P.; Cyron, L.; Julsaint, M.; Joe Ko, W.; Riegerl, V.; Skrzypczyk, M.; van Giffen, T.; Sirimanne, S.; Fredriksson, T.; United Nations. Digital Economy Report 2021. Cross-border data flows and development: For whom the data flow.

[7] Bughin, J.; Seong, J. (2018). Assessing the Economic Impact of Artificial Intelligence. McKinsey Global Institute. ITU Trends, Emerging trends in ICTs, Issue Paper No. 1.

[9] https://digichina.stanford.edu/work/translation-internet-information-service-algorithmic-recommendation-management-provisions-effective-march-1-2022/
